
NFS Traffic From Tools

Builds can run many tools in parallel. Lots of users can each run builds with many parallel tools. All that I/O traffic has to go through the repository server.

It would be preferable to distribute this load. Each RunToolServer could be responsible for serving the volatile directories for its own tool runs. This could also make tool I/O faster, since it would be serviced locally. However, it would create the problem of getting the derived files produced by each tool run back to the central store.

NFS Traffic Reading Build Results

We've recently been having difficulty in this area at Intel. Our builds have tens of thousands of result files. Users often ship a build result as symlinks and then run a series of tests on the build result as batch jobs across a collection of clients. These all reach into the shortid pool and have been putting significant loads on the kernel NFS server. With hundreds of users and thousands of clients running batch jobs using build results, this can reach the point that it degrades the performance of the whole system.

It would be preferable to distribute the load for accessing build results across multiple machines.

Peer-to-peer Shortids

Multiple clients are already reading the files from the server and storing them in their local NFS client cache, but this only helps each individual client. With some work, we could make our own caching layer which makes it possible for clients to serve immutable shortids to each other in a "peer-to-peer" style. This would make the system scale with the number of client hosts running such a caching agent.

If we used this same caching layer with volatile directories, then the client which ran the build step which produced a derived file would already have a copy of that derived file ready to be delivered to a peer. To put it another way, building itself would "seed" the peer-to-peer network.
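The lookup order such a caching layer implies (local cache, then peers, then the central server) can be sketched as follows. This is only an illustration under stated assumptions: `ShortidCache`, `seed`, `lookup_local`, and the `Fetch` callback type are hypothetical names invented here, and contents are held in memory rather than on disk.

```python
from typing import Callable, Dict, Iterable, Optional

# Hypothetical callback type: returns the file contents, or None on a miss.
Fetch = Callable[[int], Optional[bytes]]


class ShortidCache:
    """Per-host cache of immutable shortid contents (illustrative only)."""

    def __init__(self, peers: Iterable[Fetch], server: Fetch) -> None:
        self._local: Dict[int, bytes] = {}
        self._peers = list(peers)
        self._server = server

    def seed(self, shortid: int, data: bytes) -> None:
        """Record a derived file that a local build step just produced."""
        self._local[shortid] = data

    def lookup_local(self, shortid: int) -> Optional[bytes]:
        """Answer a peer's request from the local cache only."""
        return self._local.get(shortid)

    def get(self, shortid: int) -> bytes:
        """Return contents, trying local cache, then peers, then the server."""
        data = self._local.get(shortid)
        if data is None:
            for peer in self._peers:
                data = peer(shortid)
                if data is not None:
                    break
        if data is None:
            data = self._server(shortid)
        if data is None:
            raise KeyError(f"shortid {shortid:#x} not found")
        # Safe to cache indefinitely because shortid contents are immutable.
        self._local[shortid] = data
        return data
```

A host that ran a build step would call `seed` for each derived file, and other hosts would list that host's `lookup_local` among their peers, so the repository server is contacted only when no peer has the file.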

For more on this see /PeerToPeerDesign.

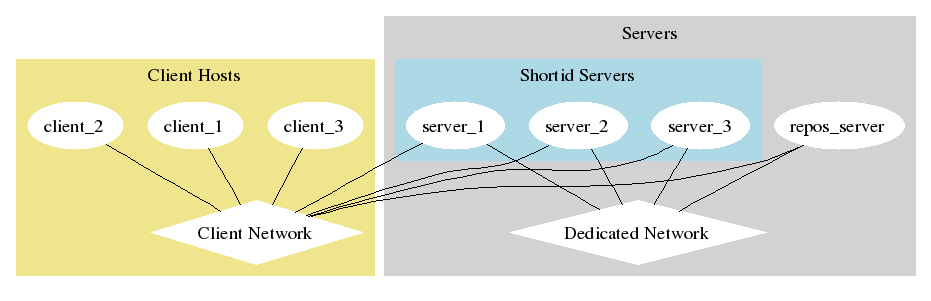

Split Shortid Pool Across Multiple Servers

There is another possible way to implement load sharing for the shortid pool that requires a fair bit more infrastructure, but less development work. In an environment that already has many different NFS file servers, there are a couple of ways the shortids could be split across them:

- Simply use symbolic links for the top layer of the numbered directories off to NFS hard mounts on different file servers. Assuming a fast interconnect between the Vesta server and the NFS servers (ideally a separate dedicated network used only between the repository and the other NFS servers), this might not present too much of a performance hit to the clients. This would require changing the repository's deletion code to not remove empty directories if they happen to be symlinks.

- Slightly more involved would be using N bits of the shortid space to segment between different file servers. The repository would still maintain its shortid pool as normal, but an additional SRPC call would be added. When the evaluator is shipping as links, it would find which of those remote pools the link target should live in, create the symlink, and check whether the target exists. If it's not yet present, it would send an SRPC request to the repository, which would copy it to the target in a background thread. (KenSchalk: I don't think we can do that asynchronously in the background, as that would mean that when the evaluator is done shipping, some of the links might point to non-existent targets or files that have only been partially copied.) Then, when weeding, the repository would delete the sid file from both the central pool and the remote mirror. While this change would be more work, it would allow building to use the central (presumably fast) sid pool, but still eliminate the load from results shipped as links.
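For the first option above (symlinked top-level directories), the required change to the deletion code amounts to one extra check before removing an empty directory. The sketch below is in Python purely for illustration; `remove_if_empty` is a hypothetical helper and does not reflect the repository's actual deletion code.

```python
import os


def remove_if_empty(path: str) -> bool:
    """Remove `path` if it is an empty real directory.

    Symlinks are never removed, even when the directory they point to is
    empty, so a link to a pool on another file server survives cleanup.
    Returns True only if a directory was actually removed.
    """
    if os.path.islink(path):
        return False
    if not os.path.isdir(path):
        return False
    try:
        os.rmdir(path)  # fails with OSError if the directory is not empty
    except OSError:
        return False
    return True
```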

Note that splitting the pool this way would scale in proportion to the number of separate NFS servers introduced.
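The second option (segmenting by N bits of the shortid) can be sketched roughly as below. Several details are assumptions made for illustration only: that shortids are treated as 32-bit values, that the top N bits select the pool, and that a remote pool stores each file under a flat hexadecimal name. `pool_index`, `ship_as_link`, and `request_copy` are hypothetical names; `request_copy` stands in for the proposed new SRPC call and is assumed to block until the copy is complete, in line with the concern about asynchronous copying noted above.

```python
import os
from typing import Callable, Sequence


def pool_index(shortid: int, n_bits: int, sid_bits: int = 32) -> int:
    """Select a remote pool from the top n_bits of a sid_bits-wide shortid."""
    if not 0 < n_bits <= sid_bits:
        raise ValueError("n_bits must be between 1 and sid_bits")
    return (shortid >> (sid_bits - n_bits)) & ((1 << n_bits) - 1)


def ship_as_link(
    shortid: int,
    link_path: str,
    pool_roots: Sequence[str],
    n_bits: int,
    request_copy: Callable[[int, str], None],
) -> str:
    """Create a symlink at link_path to the shortid's file in its remote pool.

    If the target is not yet present in the remote pool, request_copy is
    called and is expected to return only once the copy has finished.
    """
    if len(pool_roots) != 1 << n_bits:
        raise ValueError("need exactly 2**n_bits pool roots")
    root = pool_roots[pool_index(shortid, n_bits)]
    target = os.path.join(root, f"{shortid:08x}")  # flat layout is assumed
    os.symlink(target, link_path)
    if not os.path.exists(target):
        request_copy(shortid, target)
    return target
```

With `n_bits = 2`, for example, four pool roots would be needed, and each would receive about a quarter of the shipped results if shortids are spread evenly over the selected bits.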

Related Projects

While the problems and opportunities represented by Vesta's data distribution needs may be somewhat unique, it would be worth investigating other distributed file system designs: